The Board’s Role in AI Readiness: Why Oversight Must Catch Up to Exposure

- David Camacho

- May 8

Updated: Jun 24

If AI is just “on the roadmap”, your board is already falling behind

AI is in your board packs. Budgets are increasing. Pilot projects are underway.

But if you think that means your organisation is AI-ready, think again.

Your teams are already using AI, often with no oversight. Sensitive data is entering public systems. Regulators are shifting from curiosity to scrutiny. And meanwhile, 70% of AI initiatives globally are failing to deliver value.

That’s not a technology failure.

It’s a leadership one.

And it starts in the boardroom.

The Oversight Gap You Can’t Ignore

AI is being discussed more at board level: 84% more board reports now mention AI. But only 31% of companies have disclosed any formal board oversight, and just 11% assign AI responsibility to a specific committee.

Few have directors with actual AI fluency: just 1 in 5.

Meanwhile:

- 75% of employees are already using AI at work

- 78% bring in their own tools without formal approval

- Fewer than half of companies have an AI policy in place

And under New Zealand’s Privacy Act 2020, many organisations may already be non-compliant because personal data has been shared with public AI tools.

This isn’t a future challenge. It’s a current risk, and most boards are flying blind.

The Five Domains of AI Governance Risk

AI is not an “IT issue”. It sits across every key board responsibility. Here’s where the exposure lives:

1. Compliance Risk

The Privacy Act 2020 requires that all personal data, including employee and customer information, be handled with care. If this data is pasted into ChatGPT, Gemini, or similar public tools without protections, you may already be at risk of non-compliance.

2. Data Risk

Your organisation’s IP, strategy documents, or internal communications may be leaking out via AI tools. Shadow AI, the unsanctioned use of AI by staff, is rampant, and directors rarely see the full extent.

3. Financial Risk

Boards are approving AI spend without the capability to assess ROI. That’s why 70% of AI projects fail to deliver value. Unaligned pilots. Disconnected investments. No path to scale. You wouldn’t sign off a capital project without a business case, so why treat AI differently?

4. People Risk

When there’s no guidance, staff experiment in secret. That erodes trust, undermines learning, and kills momentum. Even worse, your best talent may be operating outside the system, or leaving entirely if they feel unsupported.

5. Strategic Risk

AI isn’t just an efficiency tool. It’s a strategic capability.

Boards that fail to align AI investments with business goals risk falling behind more adaptive, more capable competitors.

Boards Are Focusing on Tools, Not Readiness

This is the core disconnect.

Organisations keep chasing tools, vendors, and pilots, while ignoring the missing foundations:

- Do we have the skills?

- Are risks being managed?

- Is there internal trust?

- Do we know what’s actually happening across teams?

Until those questions are answered, you’re not investing; you’re rolling the dice.

The B-O-A-R-D Oversight Model

To move from passive to proactive, we recommend a simple five-part model:

B — Build Baseline Understanding

Boards don’t need to write prompts, but they do need to interrogate assumptions and plans. Request tailored briefings. Build literacy. Make AI part of your regular agenda.

O — Oversee Policy and Risk

Ask for a Shadow AI audit. Review whether data risks are being managed, acceptable-use rules are in place, and Privacy Act obligations are being met. If this were cybersecurity, you’d already be doing it.

A — Align With Strategic Objectives

Demand clarity on how AI connects to the organisation’s actual goals. Push back on disconnected projects. Ensure capability is built before scaling tools.

R — Review Readiness Regularly

Run quarterly readiness reviews. Ask for maturity snapshots. Track actual adoption, not just policy intent.

D — Develop Director Capability

Offer structured AI training to directors. Bring in advisors. Add expertise to your board or advisory panel. AI fluency is now a governance asset.

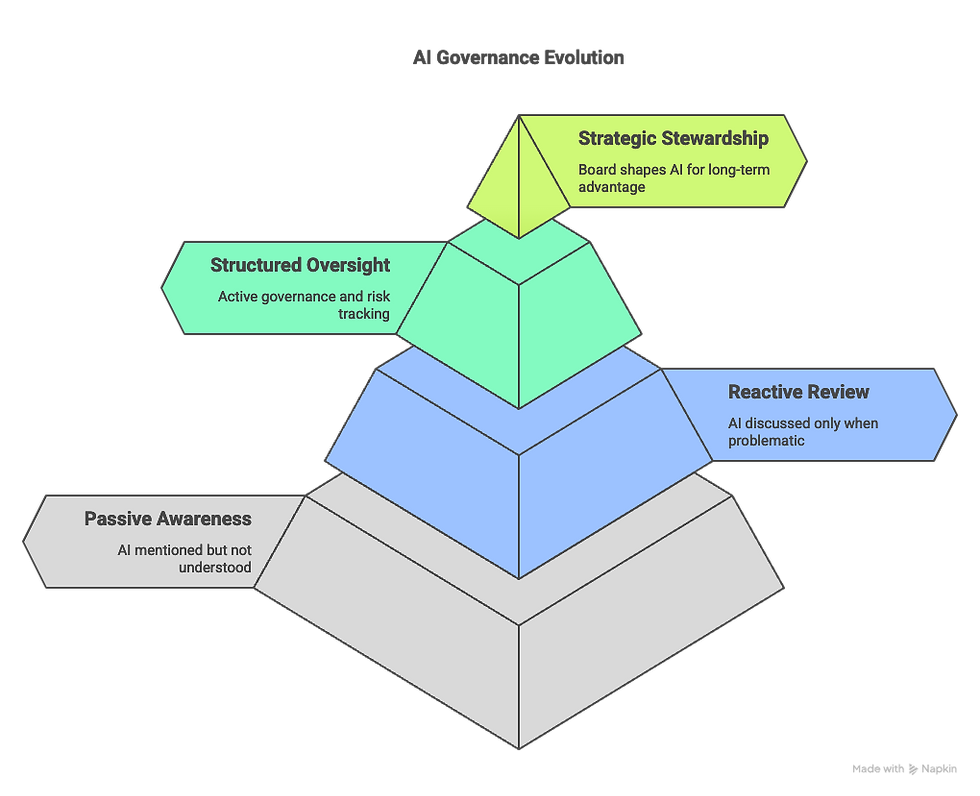

Board AI Maturity Ladder

Where is your board now, and where would a regulator place you?

Your 30-Day Action Plan

Within 7 days: Commission a Shadow AI Audit

Get a clear map of actual AI use, exposure points, and risk.

Within 14 days: Book a Board AI Briefing

Align your directors on risk, opportunity, and legal obligations.

Within 30 days: Assign Oversight Responsibility

Formalise which committee or director owns AI governance moving forward.

This Isn’t Optional Anymore

Your staff are already using AI. Your competitors are building capability. Your board is now accountable for oversight, risk, and strategic direction.

And if 70% of projects are still failing to deliver ROI, then the real question is this:

Will your board lead AI transformation, or audit it after the damage is done? (This is your moment. Choose leadership.)

→ Book a 15-minute AI Governance Briefing or request our Board Readiness Starter Pack. We’ll show you where you stand, and what to do next.

Information provided is general in nature and does not constitute legal advice.
